Today, I’ll talk about my experience of using A/B testing to improve digital innovations.

In this day and age, everything is becoming more digital, and data and speed are becoming more important than ever. Business leaders and IT professionals alike must distinguish between ideas and innovations that are promising and those that aren’t. AB testing is being used to help with this decision.

How and Why is AB Testing Used?

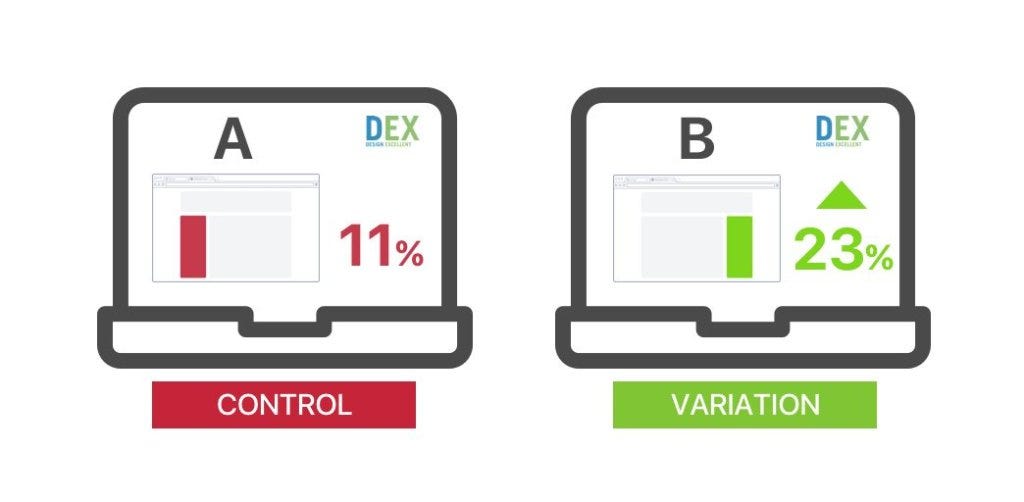

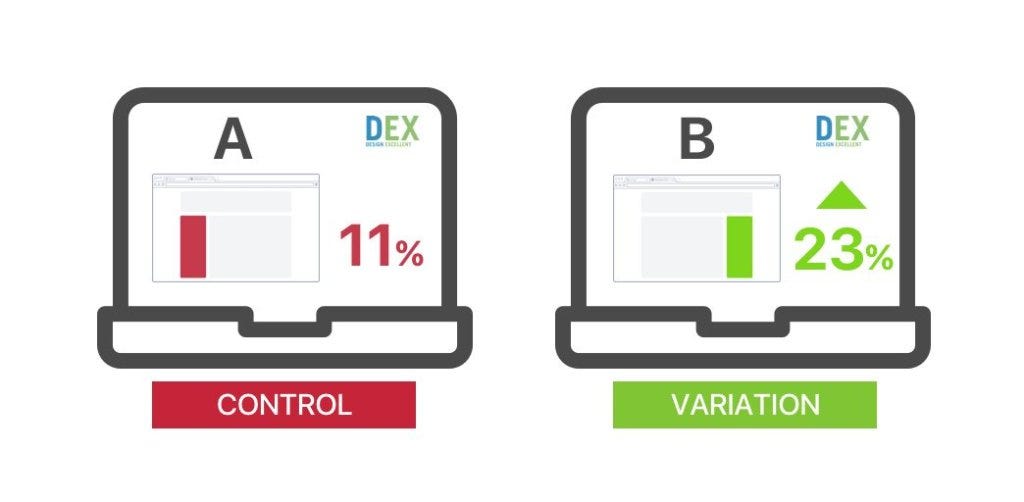

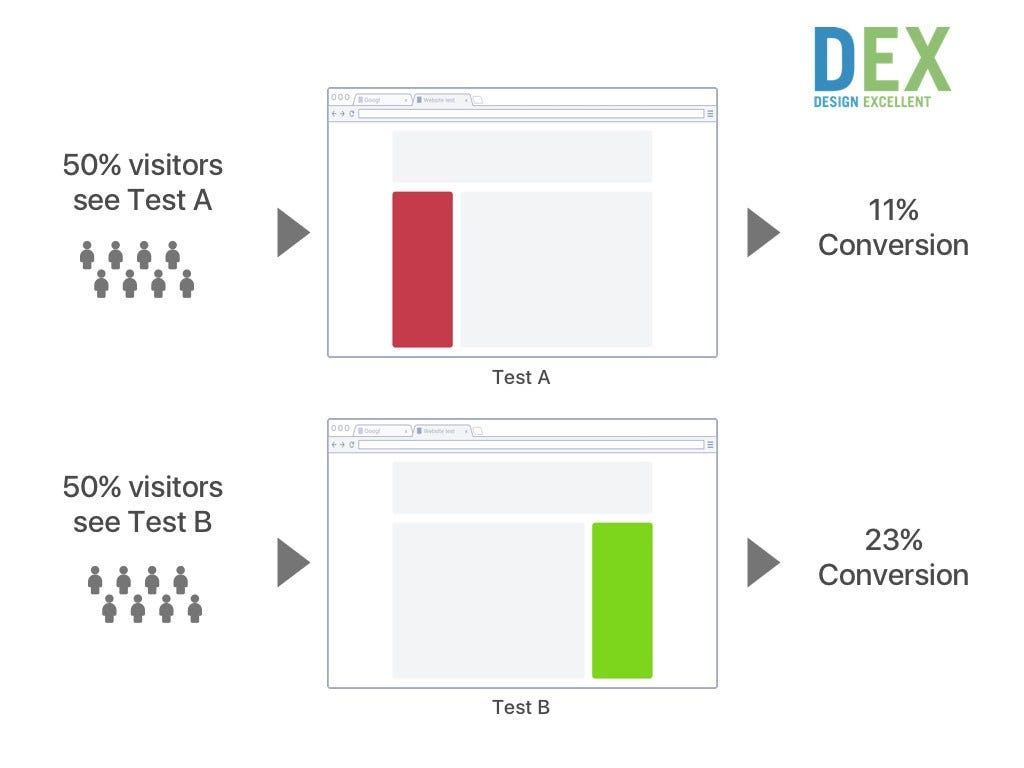

A/B testing, also known as split testing, allows you to compare two (or more) versions of something to determine which one works better. This is the method that most companies use to determine the best product price, the perfect website landing page and the correct design for a new app, to mention a few.

Beyond just being a comparison tool, A/B testing examines how the behaviour of users changes, and as such, gives designers the ability to adjust the attributes of digital innovations to suit individual preferences.

That’s not so difficult to do!

The easiest way to change these attributes is to release a few versions of the same “product” at the same time. These versions differ from one another only in small details.

For example: different positions of the call-to-action button, choice and colour of the font, and even the image size. As these varying versions are released simultaneously, the users’ reactions are recorded in order to discover which one they favour the most.

A/B testing is a very powerful technique.

It’s specifically used to increase the conversion rate, i.e. the share of customers who buy products, click links to advance to the next page, sign up for mailers, and so on.

Research suggests that A/B testing can increase leads by 30–40% for B2B websites and by up to 20–25% for e-commerce sites.

As such, AB testing should definitely form part of your strategy to increase conversions!

A/B Testing Picks Up Small Changes that Lead to Big Results

By using A/B testing, you are able to uncover relatively small changes, but the impact of these could be big (and sometimes even disastrous).

Do you want to know something cool?

Google does AB tests every 20 minutes on its algorithm for search in order to refine and further perfect search for web users.

You may wonder why A/B testing should form part of everyday life for these companies.

That’s a good point.

The answer is that more and more companies are making the move to digital, and by doing this, A/B testing becomes more affordable.

For chief information officers or IT directors, A/B testing is a good way to pick the low-hanging fruit: these tests can identify small modifications that may lead to big changes in behaviour, and in doing so can even boost the IT department’s reputation throughout the whole building.

It bears repeating that small adjustments in design can cause major changes in users’ behaviour. These are called “Little Elephants” and are a good place to start with A/B testing, because such tests are inexpensive and quick, and the results are persuasive. By testing different versions, you can find out which small changes turn into Little Elephants, because those are the ones that come out on top.

A Case Study: The US Presidential Campaign

For the 2012 US Presidential Campaign, it was required that the media team convert visitors to the website into subscribers, and the focus would then be on converting the subscribers into possible donors.

At first, very few people clicked on the “Sign Up” button, and as a result, low conversion rates were recorded. The team then decided to use AB testing on certain parts of the website and to use the results of that to alter the website to achieve the best results.

It was roughly calculated that about 4 million of the campaign’s 13 million subscribers signed up as a result of the website being A/B tested.

That’s impressive, isn’t it?

Before US President Barack Obama’s re-election in 2012, his campaign team carried out a few hundred controlled experiments from emails to web pages. The aim was to increase campaign donations, and AB testing was the method chosen to perform these tests.

Over a period of 20 months, the number of website visitors increased by nearly 50% and conversions by more than 150%. Since many people would argue that these donations helped sustain Obama’s campaign, it could be said that these A/B tests helped change history.

There is one thing we can certainly say: A/B and multivariate testing are vital for hypothesis-driven design.

Other interesting case studies are:

- DHL case: the company achieved 98% conversion rate increase by changing general images and increasing the size of the contact form;

- Citycliq case: the company achieved 98% conversion rate increase by changing Value Propositions design;

- A list of 10 Kickass A/B Testing Case Studies from VWO: Link.

Using A/B and Multivariate Testing to Enhance UX

Leaders in the IT field should learn from Internet giants such as Amazon and Google, as these companies do a lot more A/B and multivariate testing than others in order to deliver a better user experience (UX) and to increase business value.

This practice needs to be mastered, of course, especially in companies that want to match web-driven ones.

Multivariate testing, on the other hand, is more advanced than A/B testing, because numerous alterations to a web page can be tested simultaneously. The modifications are combined into every possible combination, and different sets of users are exposed to each one.

So what’s a multivariate test?

With a multivariate test you are performing a test of the elements inside one web page and not testing a different version of a web page like you are with an A/B test.

As a result, different users see different combinations of changes. Statistical models then determine the best “mixture” of changes, which is more effective than running a separate A/B test for each isolated change.
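To make the idea of combinations concrete, here is a minimal Python sketch. The element names and variants (headline, button colour, image size) are hypothetical and chosen only for illustration; a real testing tool would normally generate and serve these combinations for you.

```python
from itertools import product

# Hypothetical page elements and their candidate variants (illustrative only).
elements = {
    "headline": ["Save time today", "Start your free trial"],
    "button_colour": ["green", "orange"],
    "image_size": ["small", "large"],
}


def all_combinations(elements):
    """Return every combination of variants as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combinations = all_combinations(elements)
print(len(combinations))  # 2 * 2 * 2 = 8 combinations to test
```

The number of combinations is the product of the variant counts, so it grows quickly as elements are added. This is why a multivariate test needs considerably more traffic than a simple A/B test.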

A/B Testing Tools: How Do They Work?

These tools can set up the tests, run the experiments and check the outcomes for statistical significance. Frequently, the tools can also create content and design and make front-end changes without any code. The aim of A/B testing tools is to make testing smoother, so that it doesn’t require any collaboration with IT.

There are three different forms for these testing tools:

- The first form is integrated into web content management or portal offerings.

- Secondly, it forms part of prevailing offerings of web analytics.

- The last form is a stand-alone solution.

Web applications are not simple systems, and the outcome of a system change cannot be predicted with certainty.

However, by examining the behaviour of web users with tools such as web analytics, you can gain an understanding of what happened. Only a correctly implemented controlled experiment, though, will let you understand why it happened.

Web analytics tools are not the only ones. Heatmap software, like Crazyegg, Inspectlet, Clicktale and Mouseflow, is great for tracking the behaviour of the visitors on your site.

It is important to note that you can basically test anything in an application or on a website.

Different kinds of tests require different resources from different organisational departments. You will find that some tests require custom development, as there is a limit to what A/B tools can do. For example, you would need to involve developers and stakeholders if you want to test new price points or examine conceptually different UX.

Here is a great list of tools made by ConversionXL: Link

How to Run a Very Effective A/B Test

Step 1: Decide what you want to test

Let’s be clear. You can use A/B testing for virtually anything, from pricing products to developing mobile apps and creating the perfect landing page.

This method of testing is also highly recommended when new user experiences are introduced, as companies cannot and should not rely on existing knowledge of users’ behaviours and preferences when choosing and implementing designs.

What you are trying to achieve is what will inform the basis of the test. As an example, a company might be trying to raise the number of e-commerce purchases. Thus, they might decide to test different elements, like:

- CTAs (calls to action);

- Copywriting;

- Form elements, e.g. how long the fill-in form is;

- User flow, e.g. are there too many steps to complete the purchase? Can it be shortened in any way?

- Product details, e.g. pricing, photos, description, titles;

- Trust builders, e.g. how the data security policy is displayed.

Step 2: Create the A/B Test

The main objective of the test is to isolate the elements that stop users from “closing the deal”, i.e. doing what you intended them to do, which can range from making a purchase to subscribing to a newsletter. As in the example above, one such factor might be how many steps it takes to complete a purchase.

Changing design components, one by one, can be used to test every variable and these changes, in the form of different versions of that web page, can be tested on actual users.

By managing the IP address, you can release different versions to new users and record things like the click-through rate, how long users spend on your page, and what actions were (and were not) taken. (Check out this nice Test Duration Calculator by VWO.)

It’s up to you to see what the winning combination is, and release that version for maximum effect and results.
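As a rough illustration of how visitors can be split between versions, the sketch below hashes a visitor identifier so that the same visitor always lands in the same variant. It is only one possible approach, not how any particular tool works: the function name, the experiment name and the use of an IP address as the identifier are assumptions made for this example (a cookie or user ID would work the same way).

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("A", "B")):
    """Deterministically map a visitor to one variant of an experiment.

    Hypothetical helper: hashing the experiment name together with the
    visitor identifier keeps the assignment stable across visits.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Example with a documentation-range IP address as the visitor identifier.
print(assign_variant("203.0.113.7", "signup-button"))
```

Because the assignment depends only on the identifier and the experiment name, no lookup table is needed, and including the experiment name means a visitor is not always placed in the same group across different tests.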

Step 3: Avoid common pitfalls

In order to overcome the most common A/B testing drawbacks, here are a few ways for optimising A/B tests:

- Run the tests simultaneously — Always! By running the tests at the same time, you eliminate the possibility of certain circumstances in a point of time affecting the end result.

- Be attentive to statistical confidence. Use an online tool such as Visual Website Optimizer that includes features that calculate statistical confidence, which is the point at which the results of the test are noteworthy, i.e. they are not the outcome of random chance.

- Don’t let your instincts overrule the test result. The goal is to attain high conversion rates, and sometimes the winning option is not the intuitive one. A/B testing mainly focuses on small tweaks and not on what is called game-changing innovations. Therefore, A/B testing should not be the only way or principal way to get an innovation investment; rather, it should be added to the already existing innovation arsenal.

Lastly, A/B testing can lead the way to more experimentation, because once a new approach has been A/B tested, you can see whether it really is an improvement on the old one.

The advantage of A/B testing here is that it shows you by how much the new is better than the old, rather than just presuming it is better (because it is newer). So, implement more A/B testing and follow what the Internet giants are already doing; it might possibly change your business results for the better!
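To show what “by how much” can look like in numbers, here is a small Python sketch that computes the relative lift of a new version over the old one, together with a confidence interval for the difference in conversion rates. The function name and the visitor counts are made up for illustration, and the interval uses the simple normal approximation, which is only reasonable for fairly large samples.

```python
from math import sqrt
from statistics import NormalDist


def lift_with_interval(conv_old, n_old, conv_new, n_new, confidence=0.95):
    """Relative lift and a normal-approximation interval for the rate difference."""
    p_old = conv_old / n_old
    p_new = conv_new / n_new
    diff = p_new - p_old
    # Standard error of the difference between two independent proportions.
    se = sqrt(p_old * (1 - p_old) / n_old + p_new * (1 - p_new) / n_new)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "relative_lift": diff / p_old,
        "difference": diff,
        "interval": (diff - z * se, diff + z * se),
    }


# Made-up counts: 400 of 10,000 visitors converted on the old page,
# 460 of 10,000 on the new one.
result = lift_with_interval(400, 10_000, 460, 10_000)
print(result)
```

If the whole interval lies above zero, the data are consistent with the new version being genuinely better; if it straddles zero, the test has not yet separated the two versions.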

Analysis

A true AB test endeavours to factor out all the other elements that could potentially affect the outcome. In this way, the test only reveals the statistically significant results.

For external factors to not have an impact on the result, the two varying versions need to be tested simultaneously.

The test should also not be changed until it has been exposed to a statistically meaningful number of users. If this “rule” is not followed, then the difference between the versions might simply result from random fluctuations. It might be tempting to test every permutation; however, this would only work for the biggest websites.
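One common way to check whether a difference is more than a random fluctuation is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library; the function name and the conversion counts are invented for the example, and most A/B testing tools perform a comparable calculation for you.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)


# Made-up counts: 200 of 5,000 visitors converted on A, 260 of 5,000 on B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (for instance below 0.05 when working at 95% confidence) indicates that a difference this large would rarely appear by chance alone.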

Here are the four steps for structuring an A/B test:

- Step one is to question. After all, every test has to begin with this. Questions such as “Why don’t people click on the newsletter subscription button?” are good because they identify both the user action that you want and the criterion for success, i.e. the number of signups must grow.

- The second step is to hypothesise answers for all the questions. Using the above example, some possibilities are: “We require too much information so the user doesn’t sign up” and “The signup form is not visible enough (or at all) to the user.” In this case, the latter is the priority. When you have identified the hypothesis, you can start to design the experiment.

- The third step is to experiment, as the hypothesis needs to be validated or invalidated. Again using the example above, you need to make the signup form more prominent.

- Fourth is to analyse. If the hypothesis has been validated through the experiment, then you can implement the change. On the other hand, an invalidated hypothesis wasn’t a waste of time; you now know that the form was sufficiently visible and that there are other reasons why users didn’t sign up. You need to rethink the hypothesis and run another test. Importantly, make sure that every failed experiment is documented and shared with the team so that they can learn more about the solution and about the users.

Some recommendations would be to:

- Design questions that simplify what is being tested and what the success criteria are. The desired user behaviour also needs to be identified.

- Think of more than just one hypothesis that could answer the question, and select the one that could have the largest effect.

- If the hypothesis is not validated, ensure that the outcome is recorded and conveyed to the rest of the team.

Getting Statistically Significant Results

The most crucial part of any A/B or multivariate test is to obtain statistically significant outcomes. Badly designed tests often result in long testing cycles, which means it takes a very long time to produce results. Several factors affect how long you need to run the test for. These are:

- Number of visitors: You need to look at how many users, on average, visit the page(s) while the test runs.

- Existing conversion rate: Then examine how many of these users were already taking the action you want them to take before you ran the test.

- The actual effect of the modification you are testing: Statistical significance will be reached faster if the difference in conversion rate is big.

- How many variations are tested: A/B testing usually only tests two variations at a time, while the multivariate method can test many more.

- The statistical confidence you are comfortable with: The statistical significance of the outcome is expressed as a confidence level. Sometimes 80% confidence is all right, and at other times (for other tests) 95% confidence is preferred. What determines the right confidence level is how much it will cost you to get it wrong; for example, a higher confidence should be set when more critical factors are being tested.
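The factors above can be combined into a rough estimate of how long a test needs to run. The sketch below uses a standard sample-size formula for comparing two proportions; the function names and the example figures are assumptions made for illustration, as is the `power` parameter (the chance of detecting a real effect), which the list above does not mention but which the formula requires.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, confidence=0.95, power=0.8):
    """Approximate visitors needed per variant to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def days_needed(n_per_variant, variants, daily_visitors):
    """Days required if daily traffic is split evenly across all variants."""
    return ceil(n_per_variant * variants / daily_visitors)


# Made-up scenario: 4% baseline conversion, hoping to detect a 20% relative lift,
# two variants, 1,000 visitors per day.
n = sample_size_per_variant(baseline=0.04, relative_lift=0.20)
print(n, days_needed(n, variants=2, daily_visitors=1000))
```

Lowering the confidence level or aiming to detect a larger effect reduces the required sample size, while adding more variations spreads the same traffic more thinly and lengthens the test.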

Tests should be kept contained and small when you test content and visuals. It is recommended to run the test on one part of a page, with the modification and the objective near each other. Multiple tests can also be run simultaneously, but they must not depend on each other. They should therefore be on separate pages so that there is no interference.

Which Test Won is a nice tool to see which variation has been more successful.

Some recommendations are:

- Attention should be placed on the cumulative effects of more than one test; as such, don’t bank on excellent results from only one test.

- Know and understand how statistical significance is computed. Create the tests so that they run for the minimum time they can.

- It is good to release a few small tests to run simultaneously rather than just a big one.

- Be conscious of when the local maximum is being approached; that is, when A/B testing produces diminishing returns. At that point you need to widen the scope of the tests structurally, conceptually and in terms of capabilities, so that the potential performance of the solution can increase.

Design a Procedure to Execute Modifications from Favourable Experiments

Many A/B testing tools function by altering the page with JavaScript, but successful tests should still be implemented in the code base. These tools are vital while the tests are being conducted, but you cannot rely on them to keep modifying content after it has been delivered to the client.

Doing so would cause performance issues as well as governance mistakes, since the different elements would still live in different systems.

In order to obtain the best effect from using AB tests, outcomes that are successful should be implemented soon after the test is complete and be continuously deployed.

Using agile development processes and having the ability to steadily deliver are big benefits when large-scale AB testing is being done.

Once the implementation and deployment have been done, the result needs to be shared with the team.

Furthermore, to make sure that good performance is ongoing, the tests have to be deleted from the AB testing tool. As such, good communication is required from the A/B testing team and the developers to ensure there is no confusion.

How to ensure good communication? Some tips:

- The development team needs to be organised in an agile way. This allows for the implementation of successful tests once the results have been verified.

- Steady deployment is needed to implement the results as quickly as is possible. You don’t want the AB tool to turn into another web content management system.

- The tests should not be affected by changes in the front-end code. Thus, good communication is called for between the developers and the team conducting the A/B tests.